The key to developing flexible machine learning models that can reason the way people do may not be feeding them vast amounts of training data. Instead, a new study suggests, it may come down to how they are trained. These findings could be a big step toward better, less error-prone AI models, and could help shed light on the secrets of how AI systems and humans learn.

Humans are master remixers. When people understand the relationships among a set of components, such as food ingredients, we can combine them into all kinds of delicious recipes. With language, we can decipher sentences we have never encountered before and compose complex, original responses because we grasp the underlying meanings of words and the rules of grammar. In technical terms, these two examples are evidence of “compositionality,” or “systematic generalization,” which is often viewed as a fundamental principle of human cognition. “I think that is the most important definition of intelligence,” says Paul Smolensky, a cognitive scientist at Johns Hopkins University. “You can go from knowing about the parts to dealing with the whole.”

True compositionality may be a fundamental component of the human mind, but machine learning developers have struggled for decades to prove that AI systems can achieve it. A 35-year-old argument made by the late philosophers and cognitive scientists Jerry Fodor and Zenon Pylyshyn holds that the principle may be out of reach for standard neural networks. Today’s generative AI models can mimic compositionality, producing humanlike responses to written prompts. Yet even the most advanced models, including OpenAI’s GPT-3 and GPT-4, still fall short of some benchmarks for this ability. For example, if you ask ChatGPT a question, it may initially give the correct answer. If you keep sending it follow-up queries, however, it may fail to stay on topic or begin contradicting itself. This suggests that although the models can regurgitate information from their training data, they do not truly grasp the meaning and intent behind the sentences they produce.

But a new training protocol focused on shaping how neural networks learn can boost an AI model’s ability to interpret information the way humans do, according to a study published Wednesday in the journal Nature. The results suggest that a particular approach to teaching AI may produce compositional machine learning models that generalize just as well as people do, at least in some cases.

“This research opens up important perspectives,” says Smolensky, who was not involved in the study. “It achieves something we have wanted to achieve and had never succeeded at before.”

To train a system that appears capable of recombining components and understanding the meaning of new, complex expressions, the researchers did not have to build an AI system from scratch. “We didn’t need to change the architecture radically,” says Brenden Lake, the study’s lead author and a computational cognitive scientist at New York University. “We just had to give it practice.” The researchers started with a standard transformer model, the same kind of AI scaffolding that powers ChatGPT and Google’s Bard, but one that lacked any prior text training. They ran that basic neural network through a set of tasks specifically designed to teach the program how to interpret a made-up language.

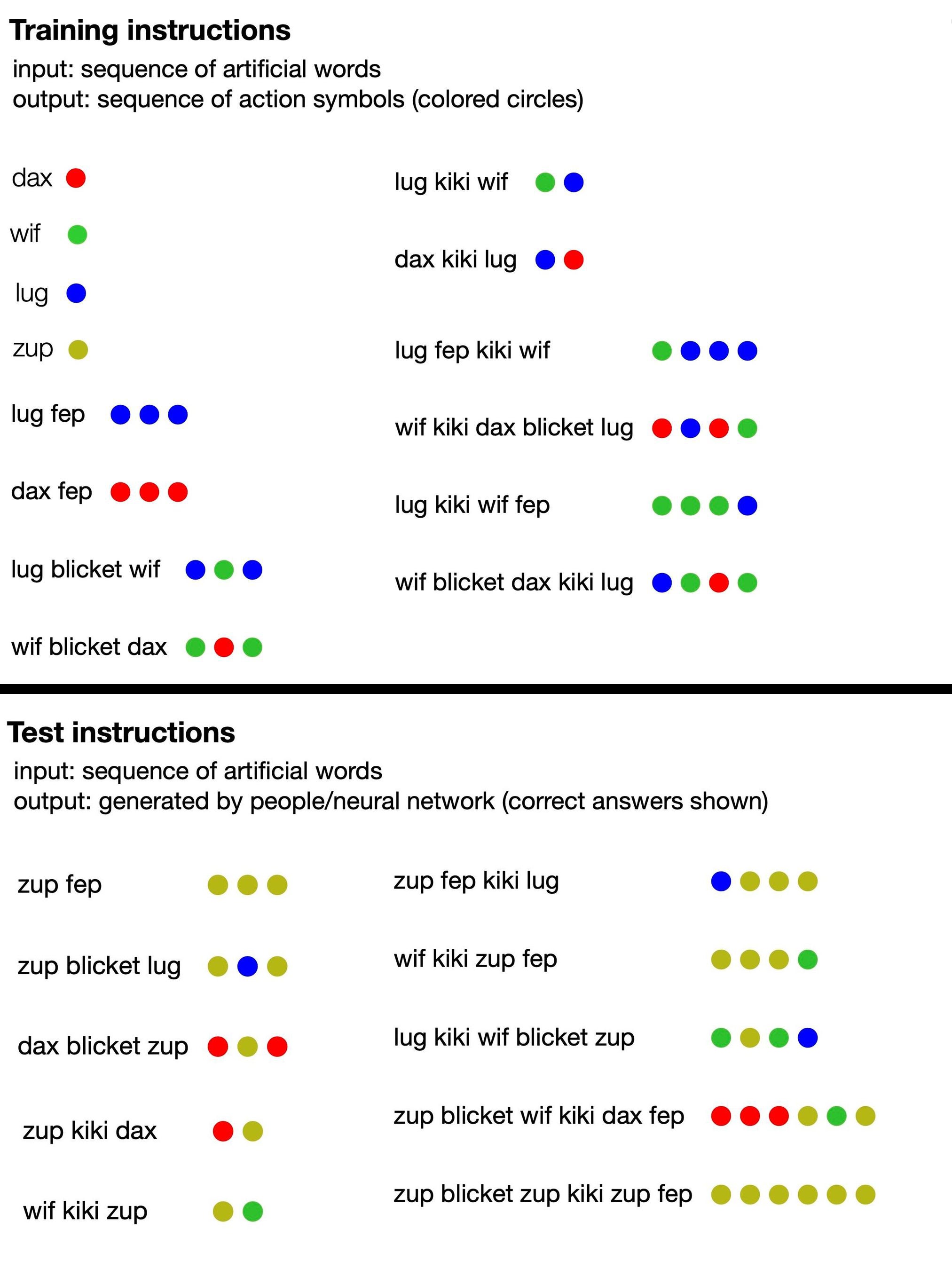

The language consisted of nonsense words (such as “dax,” “lug,” “kiki,” “fep” and “blicket”) that “translated” into sets of colored dots. Some of these invented words were symbolic terms that directly represented dots of a particular color, while others referred to functions that changed the order or number of the dot outputs. For example, dax represented a single red dot, but fep was a function that, when combined with dax or any other symbolic word, multiplied that word’s dot output by three. So “dax fep” would translate to three red dots. The AI’s training, however, included none of this information: the researchers simply fed the model a handful of example nonsense sentences paired with the corresponding sets of dots.
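The two rules spelled out above can be sketched as a tiny interpreter. This is only an illustration, not the study's model or code: the function name `interpret`, the `"RED"` label, and the choice that a function word acts on the word immediately before it are assumptions made here. The article gives no meanings for the other nonsense words, so they are deliberately left undefined.

```python
# Toy interpreter for the two rules the article describes.
# Assumptions (not from the study): dots are written as color-name strings,
# and a function word transforms the output of the word just before it.

# Symbol words map directly to dots. Only "dax" is defined in the article.
PRIMITIVES = {"dax": ["RED"]}

# Function words transform dots. Only "fep" (triple the output) is defined.
FUNCTIONS = {"fep": lambda dots: dots * 3}


def interpret(phrase):
    """Translate a phrase of nonsense words into a list of dot colors."""
    output = []  # dots already finalized
    last = []    # dots from the most recent word, still open to a function
    for word in phrase.split():
        if word in PRIMITIVES:
            output.extend(last)           # finalize the previous word's dots
            last = list(PRIMITIVES[word])
        elif word in FUNCTIONS:
            if not last:
                raise ValueError(f"{word!r} has nothing to act on")
            last = FUNCTIONS[word](last)
        else:
            # lug, kiki, blicket, etc.: meanings are not given in the article
            raise KeyError(f"no rule known for {word!r}")
    output.extend(last)
    return output


print(interpret("dax fep"))  # three red dots
```

The model in the study was never handed rules like these. It saw only example phrases paired with dots and had to infer the rules, which is what makes the task a test of generalization.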

From there, the study authors had the model produce its own series of dots in response to new phrases, and they graded the AI on whether it correctly followed the language’s implied rules. The neural network was soon able to respond coherently, following the logic of the nonsense language, even when presented with new configurations of words. This suggests that it could “understand” the made-up rules of the language and apply them to phrases it had not been trained on.

In addition, the researchers tested the trained AI model’s understanding of the made-up language against 25 human participants. They found that their optimized neural network, at its best, responded with 100 percent accuracy, while human answers were correct about 81 percent of the time. (When the team fed GPT-4 the training prompts for the language and then asked it the test questions, the large language model was only 58 percent accurate.) With additional training, the researchers’ standard transformer model began to mimic human reasoning closely, down to making the same mistakes: for example, human participants often erred by assuming a one-to-one relationship between specific words and dots, even though many of the phrases did not follow this pattern. When the model was fed examples of this behavior, it quickly began to replicate it and made the error with the same frequency as humans.

The model’s performance is particularly impressive given its small size. “This is not a large language model trained on the entire Internet; this is a relatively small transformer that has been trained for these tasks,” says Armando Solar-Lezama, a computer scientist at MIT, who was not involved in the new study. “It was interesting to see that it was nevertheless able to exhibit these kinds of generalizations.” The finding suggests that rather than simply pushing more training data into machine learning models, a complementary strategy could be to give AI algorithms the equivalent of a focused class in linguistics or algebra.

Solar-Lezama says this training method could, in theory, provide an alternative path to improving AI. “Once you have fed a model the entire Internet, there is no second Internet to feed it for further improvement. So I think strategies that force models to reason better, even on synthetic tasks, could have an impact in the future,” he says, with the caveat that there may be challenges to scaling up the new training protocol. At the same time, Solar-Lezama believes that such studies of smaller models help us better understand the “black box” of neural networks and could shed light on the so-called emergent abilities of larger AI systems.

Smolensky adds that this study, along with similar research in the future, may also advance humans’ understanding of our own minds. That could help us design systems that reduce our species’ error-prone tendencies.

For now, however, these benefits remain hypothetical, and there are some big limitations. “Despite its successes, their algorithm does not solve all the challenges at hand,” says Ruslan Salakhutdinov, a computer scientist at Carnegie Mellon University, who was not involved in the study. “It does not automatically handle unpracticed forms of generalization.” In other words, the training protocol helped the model excel at one type of task: learning the patterns in a fake language. But given an entirely new task, it could not apply the same skill. This was evident in benchmark tests, where the model failed to manage longer sequences and could not grasp “words” that had not previously been introduced.

Most importantly, every expert Scientific American spoke with noted that a neural network capable of limited generalization is very different from the holy grail of artificial general intelligence, in which computer models exceed human ability at most tasks. “You can argue that it is a very, very, very small step in that direction,” Solar-Lezama says. “But we are not talking about AI gaining capabilities by itself.”

Between limited interactions with AI chatbots, which can give the illusion of hypercompetence, and the widespread hype, many people may have inflated ideas about the capabilities of neural networks. “Some people might find it surprising that it is so difficult for systems like GPT-4 to do this kind of linguistic generalization task out of the box,” Solar-Lezama says. Although the new study’s findings are exciting, they may serve as an inadvertent reality check. “It is really important to keep track of what these systems can do, and also what they cannot do,” he says.
